Deep Reinforcement Learning Models Predict Visual Responses in the Brain: Preliminary Results

Abstract

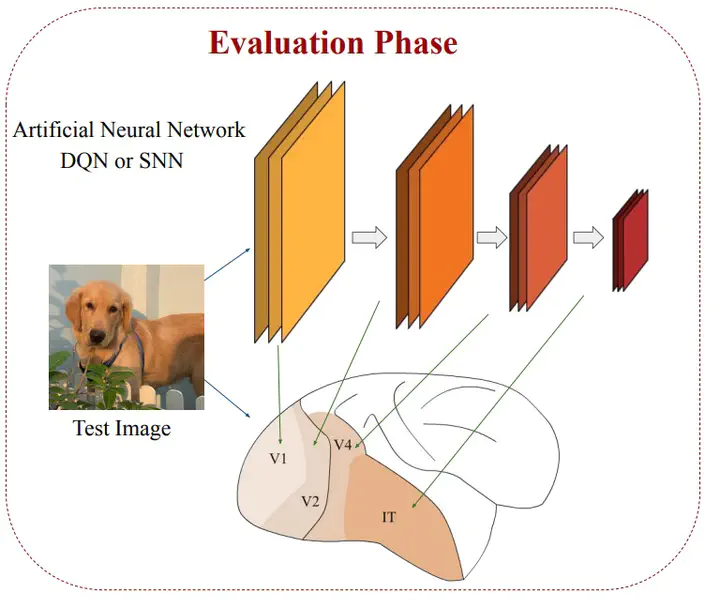

Supervised deep convolutional neural networks (DCNNs) are currently among the best computational models of how the primate ventral visual stream solves object recognition. However, existing models of visual processing do not account for embodied cognition. From an ecological standpoint, humans learn to recognize objects by interacting with them, which allows better classification, specialization, and generalization. Here, we ask whether computational models trained under an embodied learning framework can explain the mechanisms underlying object recognition in the primate visual system better than existing supervised models. To address this question, we use reinforcement learning to train neural network models to play a 3D computer game. We find that these reinforcement learning models achieve neural response prediction accuracy in the early visual areas (e.g., V1 and V2) comparable to that of the supervised neural network models. In the higher visual areas, in contrast, the supervised models yield better neural response predictions than the reinforcement learning models. Our preliminary results suggest a future direction for visual neuroscience in which deep reinforcement learning is included to supply the missing concept of embodiment.